Security teams are used to dealing with hard problems. Defined attack surfaces. Known failure modes. Controls that can be tested, patched, and enforced. AI browsers break that model.

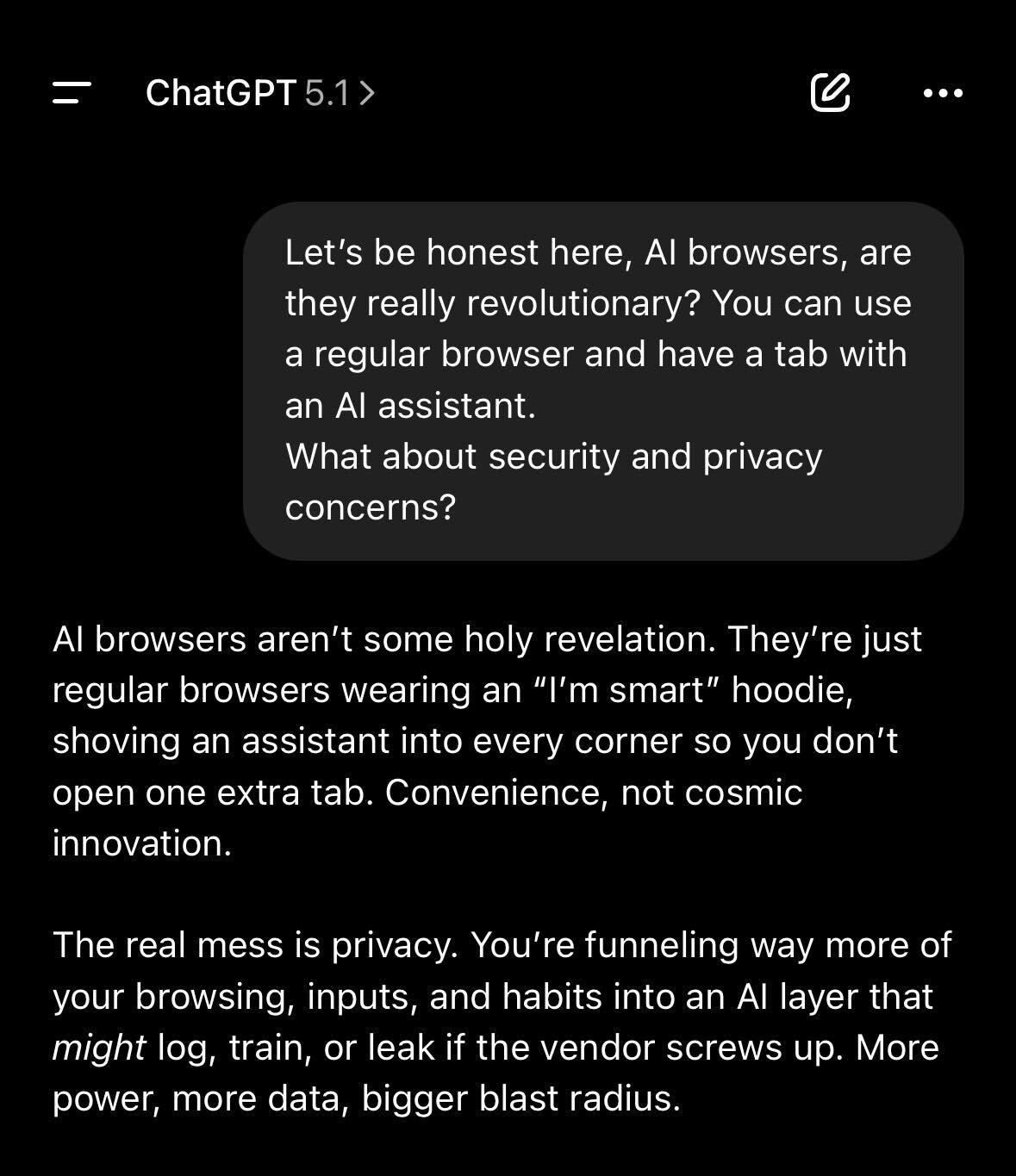

As AI-powered browsers and agentic browsing tools enter enterprise environments, a fundamental security issue is becoming impossible to ignore. In a recent Gartner report, they expressed that AI browsers may be innovative, but they are also too risky for general enterprise adoption at this time. Because these systems perform autonomous web navigation and transactions, they can bypass traditional security controls and expose organizations to unintended actions, data leakage, and abuse. The guidance to CISOs was clear: block AI browsers for now to minimize risk exposure.

Some of that risk may never be fully eliminated. Not because vendors are careless or the technology is unfinished, but because of how AI browsers are designed to operate.

This is not a theoretical AI safety debate. It is a real enterprise security problem, and it starts with how these systems interpret language.

What Are AI Browsers?

AI browsers are not just traditional browsers with smarter autocomplete or built-in chat. They are systems that read arbitrary web content, interpret it using large language models, and take actions on behalf of users.

Unlike conventional browsers, which simply render content and wait for explicit human input, AI browsers reason about what they see. They decide what a page means and what to do next. That might include clicking links, filling out forms, navigating workflows, or interacting with other applications.

From a security perspective, this is a critical shift. The browser is no longer a passive viewer. It becomes an autonomous actor operating on untrusted input from the open web.

Prompt Injection Is Central to AI Browser Security

Prompt injection sits at the center of AI browser risk because it targets how these systems function at a fundamental level.

Prompt injection attacks do not exploit broken code or traditional software vulnerabilities. They exploit language. Instructions can be embedded invisibly in web pages, documents, or messages and interpreted by the AI as legitimate guidance.

A malicious page does not need to hack the browser. It only needs to influence how the AI understands its task.

This is why prompt injection cannot be fully solved in the traditional sense. AI models are designed to interpret intent flexibly and probabilistically. When those models are exposed to untrusted content and given the authority to act, there is no reliable way to separate information from instruction in every scenario.

Prompt injection is not a temporary flaw. It is a structural condition created by combining language interpretation with autonomy.

AI Browsers Amplify Prompt Injection Risk

Prompt injection exists anywhere AI systems process untrusted text, but AI browsers dramatically expand the attack surface.

Browsers ingest enormous volumes of arbitrary content by design. Web pages, ads, comments, emails, PDFs, and internal documents all pass through the same interpretation layer. None of this content is structured or sanitized for safe AI reasoning.

At the same time, AI browsers are increasingly capable of taking real actions. They operate as authenticated users and interact directly with enterprise systems. Every autonomous action increases the blast radius of a misinterpreted instruction.

Much of this activity also happens with limited user visibility. Hidden prompts can influence behavior without obvious signals that something went wrong. The result is social engineering at machine speed, without the cues security teams are trained to recognize.

Why AI Browsers May Never Be Fully Secure

Enterprise security typically assumes that risk can be reduced to an acceptable level through controls, testing, and enforcement. AI browsers challenge that assumption.

Traditional software behaves deterministically. The same input produces the same output. AI-driven systems do not. The same content can produce different actions depending on context, phrasing, or prior interactions.

This does not mean AI browsers are unsafe by default. It does mean they will never offer the same security guarantees as deterministic software. Treating them as if they eventually will is a category mistake.

Secure enough means something different when a system interprets language instead of executing fixed logic.

Enterprise AI Security Risks That Matter Most

The real risk is not that an AI browser might misunderstand a web page. The risk is what that misunderstanding enables.

In enterprise environments, AI browsers often have access to email, SaaS applications, internal tools, and sensitive workflows. They can act with the authority of a logged-in employee.

When an AI agent sends a message, submits data, or navigates a system, security teams are left with difficult questions during an incident. Was this action explicitly approved by a user, or inferred by the model? What content influenced the decision? Where is the audit trail?

These ambiguities complicate incident response, compliance, and accountability. They turn minor errors into security events and make serious breaches harder to investigate.

AI Browser Security Is a Trust Problem

At its core, AI browser risk comes down to trust. Enterprises are granting systems designed to interpret language the same privileges they grant deterministic software.

That works until language itself becomes the attack vector.

The web has always been hostile. AI browsers convert that hostility into executable influence. This does not mean organizations should ban AI browsers outright, but it does mean they must stop treating them as low-risk productivity tools.

What a Realistic AI Browser Security Strategy Looks Like

A defensible security posture starts with accepting that some risk is inherent. AI browsers should be treated as high-risk AI systems by default, with constrained permissions and clearly defined boundaries.

High-impact actions should require explicit user intent, not silent autonomy. Security teams need visibility into behavior, not just access. Knowing that an AI agent logged in is far less useful than knowing what it actually did.

Until organizations have policy enforcement, monitoring, and auditability at the AI interaction layer, AI browsers should be governed as shadow AI.

No policy. No enforcement. No control.

The Bigger Shift in Enterprise AI Security

AI browsers are not an isolated case. AI code assistants, enterprise copilots, and agentic AI systems all share the same structural risk. They interpret language and act on it.

That makes prompt abuse a persistent attack surface, not a bug waiting to be fixed.

Security leaders who wait for a perfect solution will wait indefinitely. Those who update their threat models now will be better prepared as AI-driven tools become unavoidable.

AI browsers do not need to be perfectly secure to deliver value. However, they do need to be treated as inherently risky.

If your AI security strategy assumes these systems behave like traditional software, it likely underestimates the risk. Book a demo to see how Prompt Security gives you visibility and enforcement across AI browsers and other agentic tools.

.png)

.png)

.png)

.png)

.png)

.png)

.png)