The Platform for AI Security

SECURE YOUR AI. EVERYWHERE IT MATTERS.

AI introduces a new array of security risks

We would know. As core members of the OWASP research team, we have unique insights into how AI is changing the cybersecurity landscape.

Introducing Prompt Security

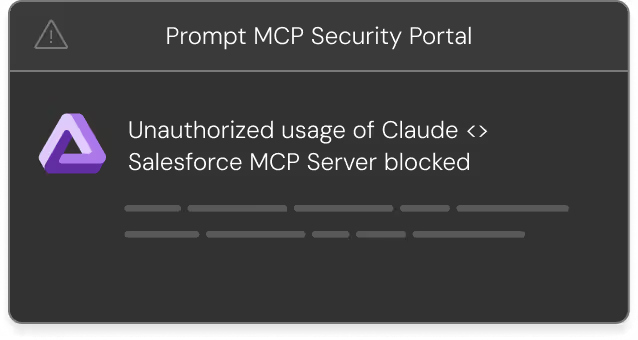

Prompt Security defends against AI risks at all levels

A complete solution for safeguarding AI at every touchpoint in the organization

Enterprise-Grade AI Security

Fully LLM-Agnostic

Seamless integration into your existing AI and tech stack

Getting started with Prompt Security is fast and easy, regardless of what your tech stack looks like.

Cloud or self-hosted deployment

It's your choice. Prompt Security can be delivered as SaaS or on-premises based on your unique needs.

Trusted by Industry Leaders

Announcing Prompt Security’s Automated AI Red Teaming

AI Risk Assessment Tool

Get instant access to detailed risk assessments powered by Prompt Security's specialized scoring methodology. Whether you're evaluating popular AI tools or assessing MCP servers, our platform provides transparent risk scores, parameter breakdowns, and certification status checks.

Time to see for yourself

See how organizations are securely enabling AI with Prompt Security
