The Platform for GenAI Security

Focus on innovating with Generative AI, not on securing it.

AI Security Powering the Most Innovative Companies Worldwide

EXPLORE RISKS

AI introduces a new array of security risks

We would know. As core members of the OWASP research team, we have unique insights into how Generative AI is changing the cybersecurity landscape.

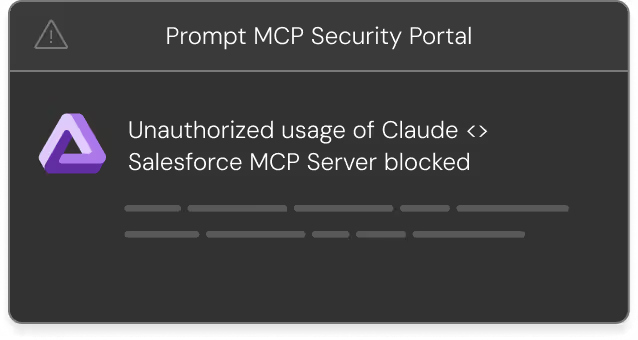

Introducing Prompt Security

Prompt Security defends against AI risks at all levels

A complete solution for safeguarding AI at every touchpoint in the organization

Easily deploy in minutes and get instant protection and insights

Enterprise-Grade GenAI Security

Fully LLM-Agnostic

Seamless integration into your existing AI and tech stack

Getting started with Prompt Security is fast and easy, regardless of what your tech stack looks like.

Cloud or self-hosted deployment

It's your choice. Prompt Security can be delivered as SaaS or on-premises based on your unique needs.

Trusted by Industry Leaders

Latest Blog

July 8, 2025

Shaping the Conversation: Our Top 4 LinkedIn Newsletter Picks on AI

Stay ahead in AI with four must-follow LinkedIn newsletters that deliver big ideas, real-world strategies, and deep technical dives - all in one place.

Latest News

Time to see for yourself

Learn why companies rely on Prompt Security to protect both their own GenAI applications and their employees' Shadow AI usage.
