Congratulations! OpenAI just unveiled a shiny new ChatGPT agent that's capable of thinking and acting on your behalf. It’s like your very own digital assistant who never needs coffee breaks or sleep. Sounds dreamy, right? Maybe, until you realize your new "helper" might also unintentionally expose your organization to more security risks than a distracted intern with unrestricted server access.

Meet the ChatGPT Agent

OpenAI’s ChatGPT agent is designed to handle tasks autonomously, from managing calendars and shopping online to compiling detailed reports. Want to plan a wedding, book travel, or prep for client meetings? ChatGPT now promises to do it all without you toggling through ten different apps.

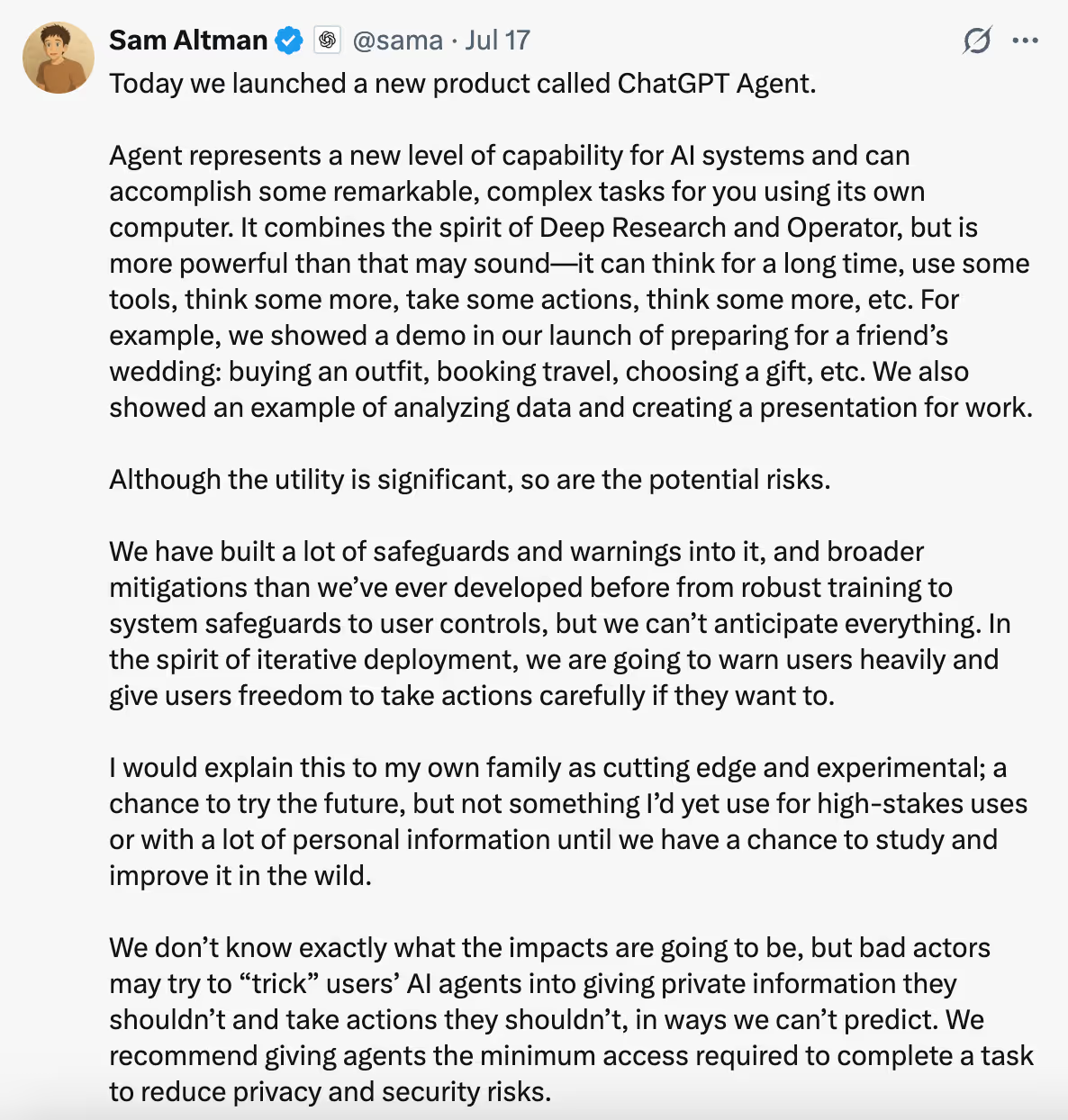

But here’s the kicker: as convenient and groundbreaking as this sounds, granting an AI this much autonomy isn't without significant risks. Even OpenAI CEO Sam Altman admits it's "cutting edge and experimental." Translation: proceed with caution. Really.

What Could Possibly Go Wrong?

Glad you asked. ChatGPT’s agent capabilities, while impressive, mean you're potentially opening your doors wider than ever to unintended data leaks or privacy invasions. Imagine accidentally granting the agent excessive access, like giving it your full calendar when it only needs to pick out a suit for next week's conference.

Moreover, these agents aren't immune to bias or inaccuracy, including "hallucinations," as industry insiders charmingly call them. An AI acting autonomously on inaccurate information isn't just inconvenient; it’s potentially disastrous. Remember, the AI world recently watched in horror as xAI's Grok chatbot vividly demonstrated the risks by spewing inappropriate content.

Navigating the Security Minefield

Here's what your organization should consider:

- Careful Permission Control: Ensure you're giving AI tools only the minimum access required to perform their tasks.

- Oversight and Accountability: Implement systems to review actions taken by AI agents, especially those involving sensitive data.

- Understand the Limits: Accept that while the tech is exciting, it shouldn't yet be trusted for high-stakes tasks without thorough vetting and controls.

How Prompt Security Helps

At Prompt Security, we specialize in safeguarding your organization against risks posed by cutting-edge AI like ChatGPT’s agent mode. Our solution for Agentic AI provides:

- Endpoint-level monitoring and control: Real-time protection directly on user devices, monitoring interactions between AI agents and employees without requiring any action from employees.

- Complete visibility: Instantly identify all AI usage, from approved integrations to shadow (unauthorized) AI you may not even know exists.

- Automatic enforcement: Block unauthorized actions, stop malicious prompts, and enforce security policies on every AI-powered tool.

- Audit of all interactions: Maintain a comprehensive audit trail of every interaction between AI agents and clients, including prompts and responses.

Securing ChatGPT Usage, Everywhere

Prompt Security protects your ChatGPT usage wherever it occurs: in the browser, on the desktop, in WhatsApp, and now even in its new AI agent.

Ready to embrace AI without the drama? Book a demo today, and let’s talk about keeping your AI interactions safe and secure.
